Chapter 3: Choosing an LLM for Cline

Learn to pick the best LLM in Cline by balancing speed, context, cost, and flexibility, and by matching models to your specific tasks.

"What is the best large language model for what I'm trying to accomplish in Cline?"

Beyond Benchmark Scores

The disconnect between benchmark performance and real-world effectiveness stems from the difference between controlled testing environments and the messy complexity of actual development work. Benchmarks test specific capabilities in isolation, but your Cline usage involves a complex interplay of factors that no single score can capture.

Consider a model that excels at coding benchmarks but struggles with tool usage. If your Cline setup relies heavily on MCP integrations for web scraping, documentation generation, or GitHub automation, that high-scoring model might deliver frustrating results. The skills required for effective MCP usage – understanding when to use which tool, formatting tool calls correctly, and chaining multiple tools together – aren't necessarily the same skills measured by coding benchmarks.

This is why the answer to "what's the best model" always begins with understanding your specific use cases and requirements.

The Speed Factor

One increasingly critical consideration is inference speed, measured in tokens per second. This metric indicates how quickly a model can process your requests and generate responses in Cline.

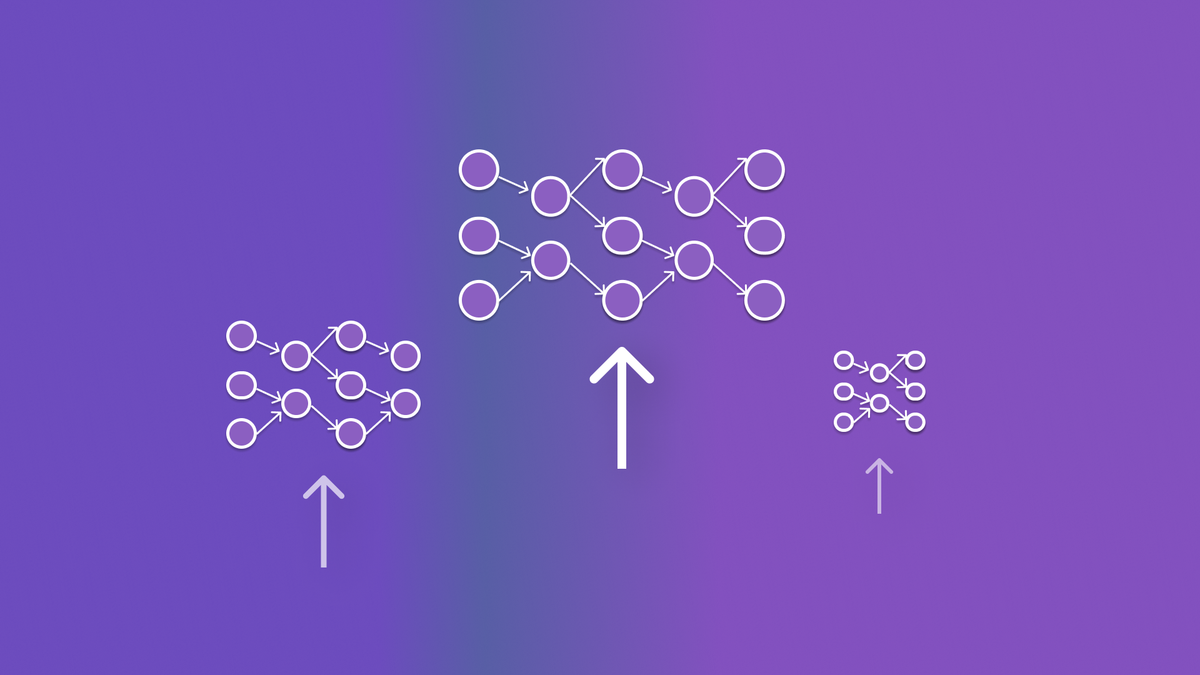

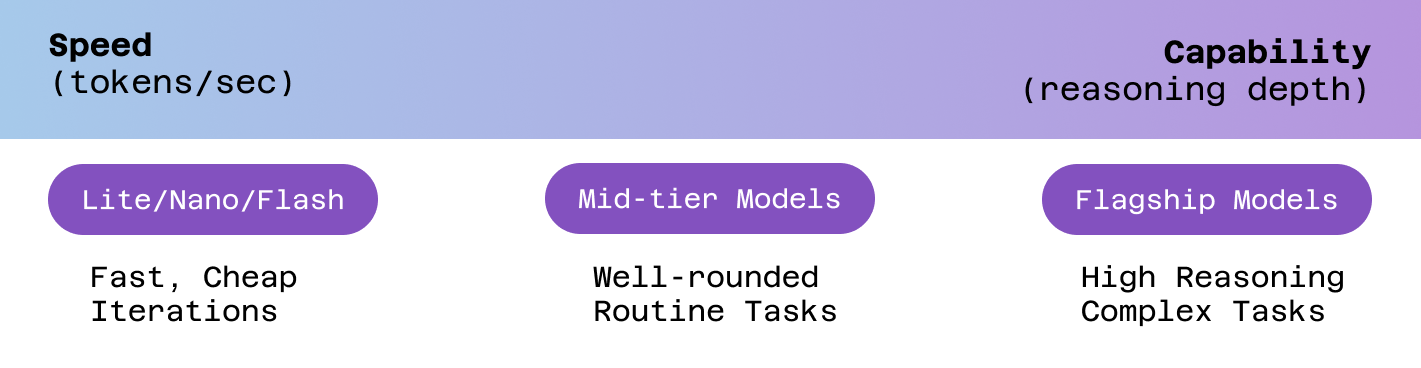

The relationship between model capability and speed often involves trade-offs. Smaller variants like "lite," "nano," "flash," or "mini" models typically deliver impressive speed but may sacrifice some sophistication in their reasoning. Flagship models designed for complex coding tasks usually provide more nuanced responses but at the cost of slower generation.

If rapid iteration is crucial to your workflow – perhaps you're doing exploratory coding, quick bug fixes, or working under tight deadlines – a fast model that delivers "good enough" results might serve you better than a slower model that produces marginally better code.

Speed becomes particularly important in interactive workflows where you're having extended conversations with Cline, making frequent small adjustments, or working through problems iteratively. The cumulative time savings from a faster model can significantly impact your productivity.
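To make the cumulative effect concrete, here is a minimal sketch of the arithmetic. It assumes generation time is roughly output tokens divided by tokens per second, and it ignores network and queueing latency. The request count, token count, and both throughput figures are hypothetical placeholders, not measurements of any real model.

```python
def generation_seconds(output_tokens: int, tokens_per_second: float) -> float:
    """Approximate time to generate a response, ignoring network and queue latency."""
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    return output_tokens / tokens_per_second


def session_seconds_saved(requests: int, output_tokens: int,
                          slow_tps: float, fast_tps: float) -> float:
    """Cumulative time saved over a session by using the faster model."""
    per_request = (generation_seconds(output_tokens, slow_tps)
                   - generation_seconds(output_tokens, fast_tps))
    return requests * per_request


# Hypothetical session: 40 requests of about 800 output tokens each,
# comparing a 50 tokens/s model with a 200 tokens/s model.
saved = session_seconds_saved(requests=40, output_tokens=800,
                              slow_tps=50.0, fast_tps=200.0)
print(f"Saved about {saved / 60:.1f} minutes")  # Saved about 8.0 minutes
```

Substitute throughput numbers you observe in your own sessions; whether the saved time outweighs any loss in output quality remains a judgment call.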

Context Window Considerations

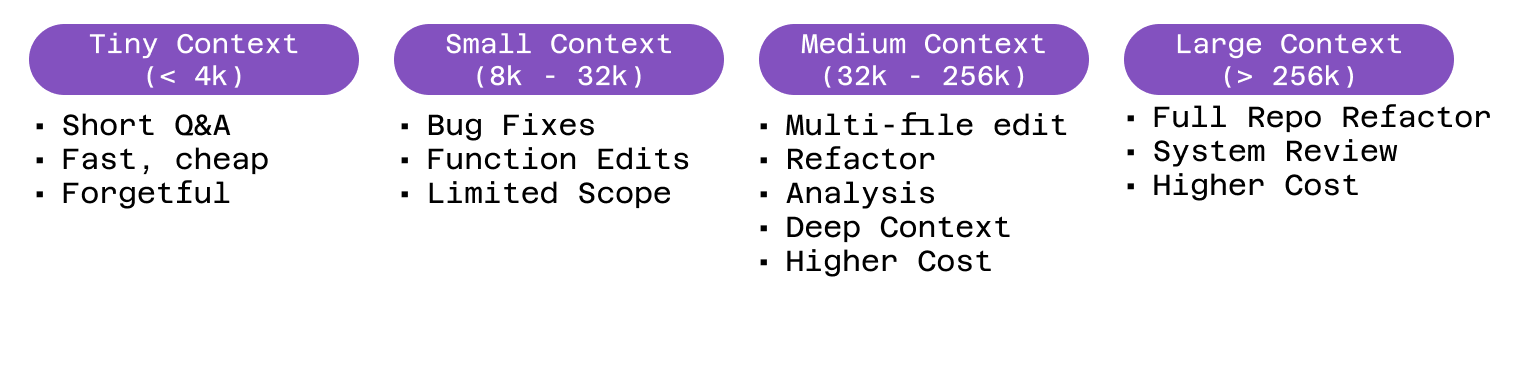

Some development tasks require extensive back-and-forth conversation, analysis of large files, or comprehensive exploration of complex codebases. These scenarios demand models with substantial context windows – the amount of information, measured in tokens, that a model can take into account within a single conversation.

Tasks that benefit from large context windows include refactoring large applications, working with extensive documentation, debugging complex systems with many interconnected components, or any situation requiring significant agentic exploration due to project size and complexity.

Managing context effectively – known in the AI industry as context engineering – becomes a skill in itself. You need to understand not just how much context a model can handle, but how to structure your interactions to make the most effective use of that context.

Cline provides full visibility into both the context window size of each model and how much of that window you've consumed as you work through tasks. This transparency helps you make informed decisions about when to switch models or start fresh conversations to optimize performance.
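One way to turn that visibility into a habit is a simple usage threshold. The sketch below is illustrative only: the function names and the 80% threshold are assumptions, not Cline settings, and the token counts are made-up values standing in for the numbers you would read off Cline's display.

```python
def context_usage(tokens_used: int, context_window: int) -> float:
    """Fraction of the context window consumed so far."""
    if context_window <= 0:
        raise ValueError("context_window must be positive")
    return tokens_used / context_window


def should_start_fresh(tokens_used: int, context_window: int,
                       threshold: float = 0.8) -> bool:
    """True once usage reaches the threshold (0.8 is an arbitrary assumption)."""
    return context_usage(tokens_used, context_window) >= threshold


# Hypothetical readings for a model with a 200,000-token window.
print(should_start_fresh(170_000, 200_000))  # True  (85% used)
print(should_start_fresh(50_000, 200_000))   # False (25% used)
```

The right threshold depends on the model and the task, so treat the default as a starting point to tune.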

The Cost-Performance Balance

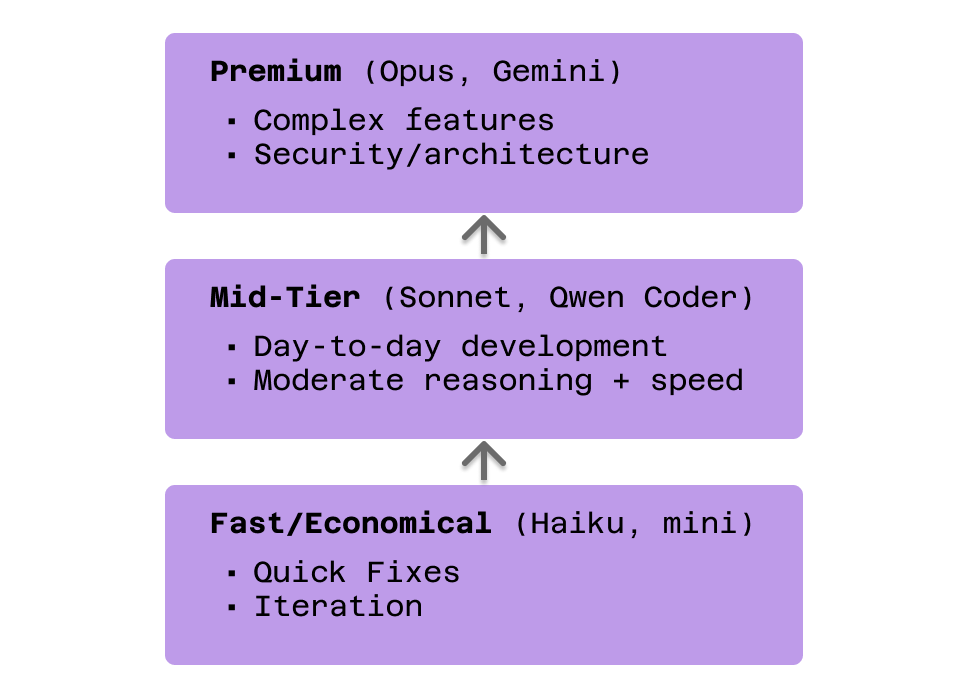

Cost management represents one of the most challenging aspects of model selection. While premium models like Anthropic's Claude Opus often deliver superior results across a wide range of tasks, the expense can quickly accumulate, especially for extensive development work.

The key lies in finding the optimal balance between performance and investment for your specific situation. This might mean using a premium model for complex architectural decisions while switching to a more economical option for routine maintenance tasks.

Cline's settings provide complete transparency about the cost structure of each model, showing both input and output token pricing. This visibility enables you to make informed decisions about when the additional capability of a premium model justifies its cost.
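Given input and output token prices, the cost of a single request is straightforward to estimate. This sketch assumes prices are quoted in USD per million tokens. The `Pricing` class, the model labels, and every price below are hypothetical placeholders rather than real rates, so substitute the figures shown in Cline's settings.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    input_per_million: float   # USD per 1M input tokens (assumed unit)
    output_per_million: float  # USD per 1M output tokens (assumed unit)


def request_cost(pricing: Pricing, input_tokens: int, output_tokens: int) -> float:
    """Estimated USD cost of one request."""
    total = (input_tokens * pricing.input_per_million
             + output_tokens * pricing.output_per_million)
    return total / 1_000_000


# Placeholder prices, not real rates for any provider.
premium = Pricing(input_per_million=10.0, output_per_million=40.0)
economy = Pricing(input_per_million=0.5, output_per_million=2.0)

# Hypothetical request: 20,000 input tokens and 2,000 output tokens.
print(f"premium: ${request_cost(premium, 20_000, 2_000):.3f}")  # premium: $0.280
print(f"economy: ${request_cost(economy, 20_000, 2_000):.3f}")  # economy: $0.014
```

Input tokens often dominate in agentic work, because the accumulated conversation and file contents are sent again with each request.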

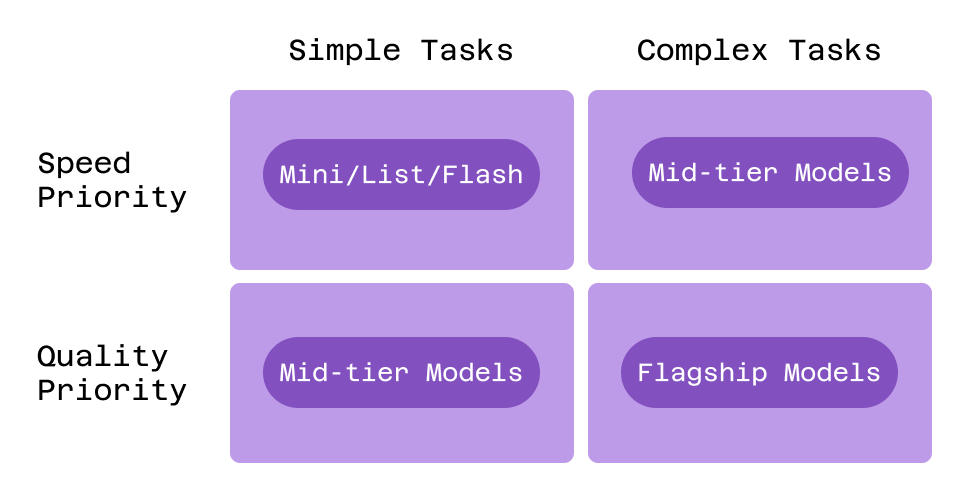

Some developers adopt a tiered approach: using fast, economical models for initial exploration and simple tasks, mid-tier models for standard development work, and premium models only for complex problems that require sophisticated reasoning.
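The tiered approach can be written down as a small lookup, which makes the policy explicit and easy to revise. Everything here is illustrative: the `Complexity` levels and the model labels are hypothetical names, not Cline identifiers.

```python
from enum import Enum


class Complexity(Enum):
    SIMPLE = "simple"      # initial exploration, small fixes
    STANDARD = "standard"  # everyday development work
    COMPLEX = "complex"    # problems needing sophisticated reasoning


# Hypothetical labels; replace them with the models you actually use.
TIER_FOR_COMPLEXITY = {
    Complexity.SIMPLE: "economy-model",
    Complexity.STANDARD: "mid-tier-model",
    Complexity.COMPLEX: "premium-model",
}


def pick_model(complexity: Complexity) -> str:
    """Return the model tier assigned to a task of the given complexity."""
    return TIER_FOR_COMPLEXITY[complexity]


print(pick_model(Complexity.SIMPLE))   # economy-model
print(pick_model(Complexity.COMPLEX))  # premium-model
```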

The Flexibility Advantage

Cline's model-agnostic design means you're not locked into any single choice. You can experiment with different models, understand their strengths and limitations in your specific context, and develop a strategy that optimizes for your particular needs.

This flexibility extends to Cline's Plan and Act modes, where you can save different model preferences for different types of work. You might configure a fast model for planning sessions where you're exploring ideas and a more capable model for implementation phases where precision matters most.

The ability to switch models based on the task at hand transforms model selection from a one-time decision into an ongoing optimization strategy. You can match model capabilities to task requirements, adjusting your approach as your understanding of different models' strengths evolves.

A Task-Driven Approach

Returning to the original question – "What is the best large language model for what I'm trying to accomplish in Cline?" – the answer lies within the question itself. The best model is the one that most effectively accomplishes your specific objectives within your constraints of time, cost, and quality.

This task-driven approach requires honest assessment of your priorities. Are you optimizing for speed, cost, quality, or some combination? Do your tasks require extensive tool usage, large context windows, or specialized domain knowledge? How important is consistency versus peak performance?

Understanding these priorities helps you navigate the trade-offs inherent in model selection. No model excels at everything, but the right model for your workflow excels at what matters most to your specific use cases.
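One rough way to make these trade-offs explicit is a weighted score. In the sketch below, both the weights and the 1-to-5 ratings are hypothetical. The ratings stand in for your own impressions after testing each model, not for benchmark data, and the model labels are placeholders.

```python
def weighted_score(ratings: dict[str, float], weights: dict[str, float]) -> float:
    """Weighted average of per-criterion ratings, using the given priority weights."""
    total_weight = sum(weights.values())
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")
    return sum(weights[name] * ratings[name] for name in weights) / total_weight


# Hypothetical priorities: speed matters most, then cost, then quality.
weights = {"speed": 3.0, "cost": 2.0, "quality": 1.0}

# Hypothetical 1-to-5 ratings from your own trials (higher is better).
candidates = {
    "fast-model": {"speed": 5.0, "cost": 4.0, "quality": 3.0},
    "flagship-model": {"speed": 2.0, "cost": 2.0, "quality": 5.0},
}

best = max(candidates, key=lambda name: weighted_score(candidates[name], weights))
print(best)  # fast-model
```

Changing the weights to favor quality flips the result, which is the point: the score reflects your priorities rather than replacing them.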

The Path to Optimization

Effective model selection becomes an iterative process of experimentation and refinement. Start by identifying your most common Cline use cases and the factors that matter most for those tasks. Test promising models in your actual development environment, paying attention not just to the quality of results but also to speed, cost, and how well each model integrates with your workflow.

Over time, you'll develop intuitions about which models work best for different types of tasks. This experiential knowledge, combined with Cline's transparency about costs, context usage, and model capabilities, enables increasingly sophisticated optimization of your development workflow.

The goal isn't to find the single "best" model, but to develop a strategic approach to model selection that maximizes your productivity and effectiveness across the full range of your development activities.

Ready to optimize your model selection strategy? Start by analyzing your most common Cline use cases and experimenting with different models for each type of task.

For detailed guidance on model selection and workflow optimization, visit our documentation. Share your model selection strategies and learn from other developers' approaches on Reddit and Discord.