MiniMax M2.5 in Cline: Built for a World Where Agents Work Together

MiniMax just released M2.5. It's available now in Cline across VS Code, JetBrains, Zed, Neovim, Emacs, and the Cline CLI. MiniMax is offering M2.5 for free for a limited time, so there's no barrier to trying it out.

We spent time with it. Here's what we found.

What's new with M2.5

M2.5 builds on the coding strengths of M2.1 and pushes into new territory. The big shift is multi-agent design, which MiniMax calls the "agent-verse." Where M2.1 was a strong single-agent coding model, M2.5 was trained to work alongside other agents, context switching between different software environments and coordinating across tasks without losing track. Most models assume they're the only agent in the room. M2.5 assumes it isn't.

The other major addition is workspace fluency. MiniMax trained M2.5 in real-world office environments, not just codebases. It handles Excel, Word, and PowerPoint natively, so it can move from writing code to building a spreadsheet to drafting a doc without switching models.

On the performance side, M2.5 generates 100 tokens per second, roughly 3x faster than Opus. It costs $0.30 per million input tokens, or $0.06 per million blended with caching. And it runs on 10B activated parameters, the smallest footprint of any model at this tier.

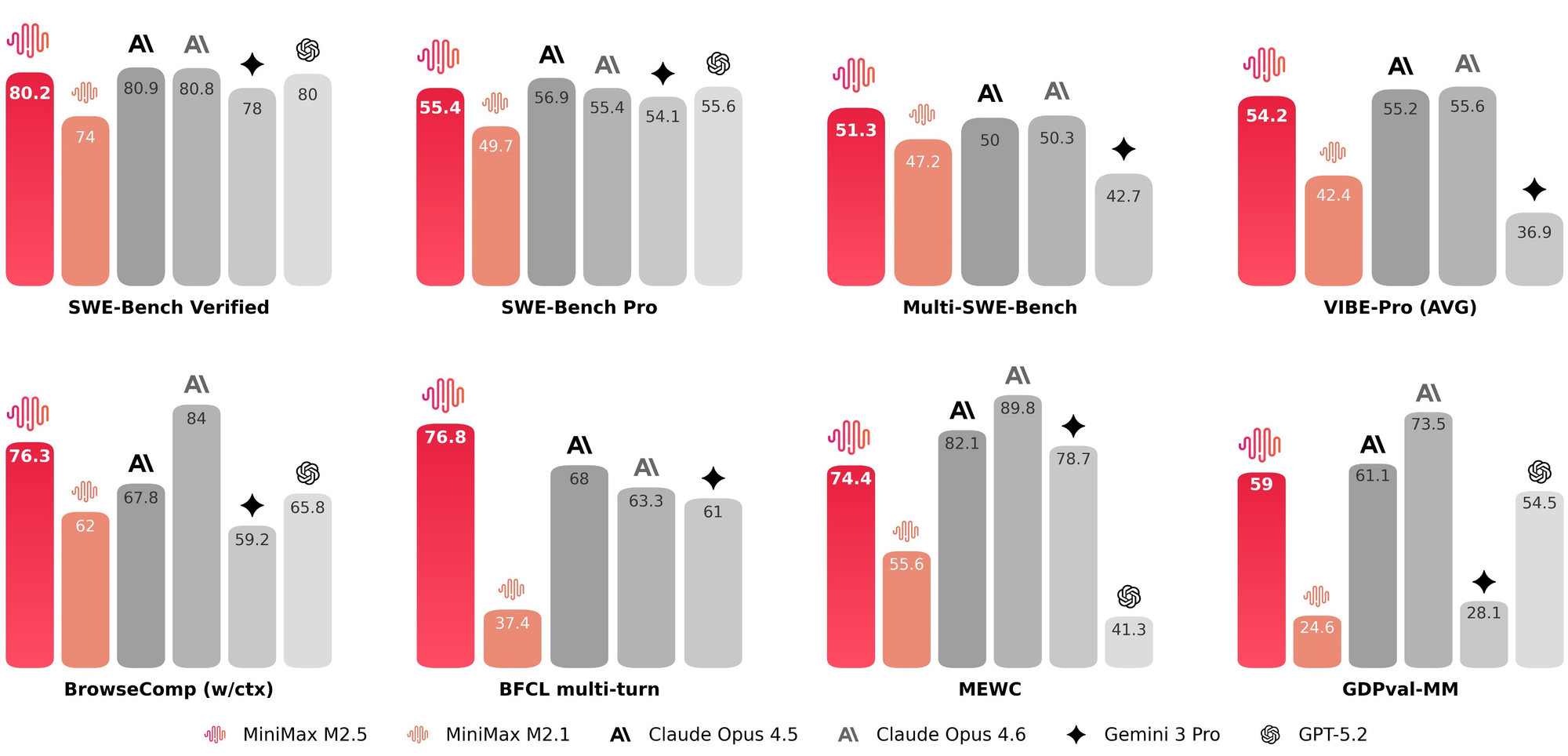

| Benchmark | M2.5 | M2.1 | Opus 4.6 | Gemini 3 Pro | GPT-5.2 |
|---|---|---|---|---|---|
| SWE-Bench Verified | 80.2 | 74.0 | 80.8 | 78.0 | 80.0 |
| SWE-Bench Pro | 55.4 | 49.7 | 53.4 | 54.0 | 54.0 |
| Terminal-Bench 2 | 51.7 | 47.9 | 55.1 | 54.0 | 54.0 |
| Multi-SWE-Bench | 51.3 | 47.2 | 50.3 | 42.7 | - |
| SWE-Bench Multilingual | 74.1 | 71.9 | 77.8 | 65.0 | 72.0 |

M2.5 beats Opus 4.6 on SWE-Bench Pro (55.4 vs 53.4), the harder benchmark with more complex real-world engineering tasks. It also edges ahead on Multi-SWE-Bench (51.3 vs 50.3), which covers issue resolution across multiple programming languages.

Opus 4.6 still leads on terminal tasks (55.1 vs 51.7) and multilingual coding (77.8 vs 74.1). On SWE-Bench Verified, they're nearly tied at 80.2 and 80.8.
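If you want to check the gaps yourself, here is a small Python sketch that computes the point differences from the table. The scores are copied from the table above; the `SCORES` dictionary and the `delta` helper are just names chosen for this illustration.

```python
# Scores copied from the benchmark table in this post.
SCORES = {
    "SWE-Bench Verified": {"M2.5": 80.2, "M2.1": 74.0, "Opus 4.6": 80.8},
    "SWE-Bench Pro": {"M2.5": 55.4, "M2.1": 49.7, "Opus 4.6": 53.4},
    "Terminal-Bench 2": {"M2.5": 51.7, "M2.1": 47.9, "Opus 4.6": 55.1},
    "Multi-SWE-Bench": {"M2.5": 51.3, "M2.1": 47.2, "Opus 4.6": 50.3},
    "SWE-Bench Multilingual": {"M2.5": 74.1, "M2.1": 71.9, "Opus 4.6": 77.8},
}


def delta(benchmark: str, a: str, b: str) -> float:
    """Score of model `a` minus score of model `b`, rounded to one decimal."""
    return round(SCORES[benchmark][a] - SCORES[benchmark][b], 1)


for name in SCORES:
    vs_opus = delta(name, "M2.5", "Opus 4.6")
    vs_m21 = delta(name, "M2.5", "M2.1")
    print(f"{name}: {vs_opus:+.1f} vs Opus 4.6, {vs_m21:+.1f} vs M2.1")
```

A positive number means M2.5 scored higher. The second column shows how much ground M2.5 gained over M2.1 on each benchmark.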

How it performs in Cline

Subagents

The latest release of Cline Subagents lets you run multiple agents on different parts of a task in parallel. M2.5 was trained for exactly this kind of setup, and it shows.

The model holds its context cleanly when other agents are working alongside it. It context switches between writing code, reviewing tests, and generating docs without mixing up which task it's on. It doesn't try to take over the entire problem. It stays in its lane, does its part, and hands off.

The cost is what makes this practical. Running three or four subagents in parallel with a model at this price point means you're not making tradeoffs about which tasks are worth delegating. You delegate all of them.
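To make the fan-out pattern concrete, here is a minimal Python sketch of delegating several tasks in parallel and collecting the results. This is not Cline's implementation or API. `run_subagent` and `delegate` are hypothetical names, and the subagent is a stub that returns a string where a real one would call a model.

```python
import asyncio


async def run_subagent(role: str, task: str) -> str:
    """Hypothetical stand-in for one subagent; a real one would call a model."""
    await asyncio.sleep(0)  # yield control, as a network call would
    return f"[{role}] done: {task}"


async def delegate(assignments: dict[str, str]) -> list[str]:
    """Run every assignment concurrently and return results in input order."""
    jobs = [run_subagent(role, task) for role, task in assignments.items()]
    return await asyncio.gather(*jobs)


if __name__ == "__main__":
    results = asyncio.run(
        delegate(
            {
                "code": "implement the parser",
                "tests": "review the test suite",
                "docs": "draft the README section",
            }
        )
    )
    for line in results:
        print(line)
```

Each subagent gets one role and one task, does its part, and hands a result back to the caller, which is the "stays in its lane" behavior described above.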

The CLI

This is where M2.5 stands out the most.

100 tokens per second is fast, but the real difference is in how the model uses those tokens. MiniMax optimized thinking efficiency, so M2.5 doesn't waste tokens deliberating when the path is clear. It plans, acts, and moves on. The output is clean. No over-explaining, no second-guessing.

With auto-approve enabled in the CLI, the pace changes how you work with it. You give it a task, it breaks it down, it executes. The feedback loop is tight enough that you stop batching tasks and start running them as they come up.

At $0.06/M blended, you can leave it running. That's the piece that makes the speed meaningful. Fast and expensive means you're still watching the meter. Fast and cheap at SOTA performance means always-on agents become a real option, not a theoretical one.
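Here is a rough back-of-the-envelope sketch of what those rates mean for a long-running agent. The two rates come from this post. The 20M-token daily workload is a made-up figure for illustration, and the sketch simplifies by billing every token at one flat rate; output-token pricing isn't covered here, so it is left out.

```python
def cost_usd(tokens: int, usd_per_million: float) -> float:
    """Cost of processing `tokens` tokens at a flat per-million-token rate."""
    return round(tokens / 1_000_000 * usd_per_million, 4)


INPUT_RATE = 0.30    # USD per million input tokens (from the post)
BLENDED_RATE = 0.06  # USD per million tokens, blended with caching (from the post)

# Hypothetical workload: an agent that reads 20M tokens of context in a day.
daily_tokens = 20_000_000

print(cost_usd(daily_tokens, INPUT_RATE))    # at the uncached input rate
print(cost_usd(daily_tokens, BLENDED_RATE))  # at the blended rate
print(round(INPUT_RATE / BLENDED_RATE, 2))   # ratio between the two rates
```

Under these assumptions, the same day of context costs $6.00 at the listed input rate and $1.20 at the blended rate, a 5x difference that comes entirely from caching.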

Beyond code

M2.5 handles Excel spreadsheets, Word documents, and PowerPoint presentations alongside code. If your workflow includes writing the code and then building the analysis spreadsheet or drafting the summary doc, you can keep the same agent on the full task without switching models.

If your work starts and ends in the codebase, this won't change much for you. But for workflows that span code and docs, it cuts out the context switch.

Self-hosting

With 10B activated parameters, M2.5 is the smallest model at this tier by a wide margin. For teams running Cline Enterprise with VPC deployments, this means SOTA-level coding performance on hardware that would have been undersized for previous-generation models.

How to use it

Select minimax-m2.5 from the model picker in Cline. It works with your MiniMax API key.

In the extension: Available in VS Code, JetBrains, Zed, Neovim, and Emacs.

In the CLI: Update to the latest Cline CLI and select the model. Try it with auto-approve on a subagent workflow. That's where the speed and cost advantage is most obvious.