Models

Free Stealth Model "code-supernova" Now Available in Cline

A mysterious model built for agentic coding, "code-supernova", drops in Cline with free access during its alpha.

Models

How zAI's GLM Coding Plans change the economics of AI development: $3/month gets you what otherwise costs $200.

Updates

Cline is model agnostic, inference agnostic, and now platform agnostic, running natively in JetBrains alongside VS Code. Your models, your providers, your IDE: no lock-in at any layer.

Updates

With LLMs multiplying fast, our new Fundamentals module helps you master model selection, tradeoffs, and coding workflows.

Beginners

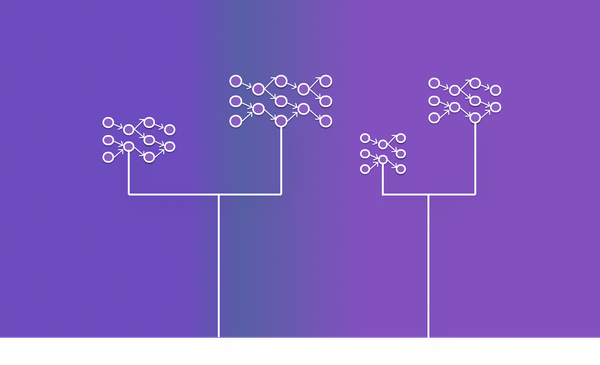

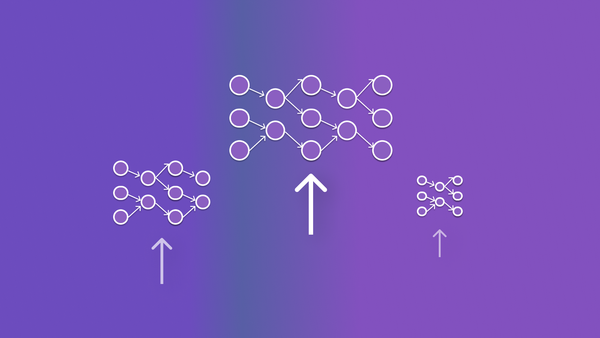

Explore how Cline’s model-agnostic design lets you route tasks through providers, aggregators, or local models to optimize cost, speed, and privacy.

Beginners

Learn to pick the best LLM in Cline by balancing speed, context, cost, and flexibility, and by matching models to your specific tasks.

Learn

Learn how to interpret coding, domain, and tool-use LLM benchmarks so you can match scores to your development needs.

Learn

Learn how to choose the right LLM in Cline by understanding trade-offs in speed, cost, reasoning, and multimodality.

Updates

We’re launching AI Coding University to help developers build the core skills for agentic coding.

Updates

xAI extends free Grok access while we ship GPT-5 optimizations that actually matter for real coding tasks.

Learn

Cline works best when your ask is specific. In this post, we review strategies for prompting Cline effectively.

Learn

Learn how System Prompt Rules guide coding style, security, and workflow, ensuring consistency, safety, and alignment in every task.